Gray-Scale (Black and White) Digital Camera: In a gray-scale camera, each pixel on the sensor measures the quantity of light striking it (its intensity), regardless of wavelength. Each pixel in the resulting image is then represented by an 8-bit number, where 0 = black, 255 = white, and the values in between represent the range of grays between those two extremes.
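The mapping from measured intensity to an 8-bit gray level can be sketched in Python as below. This is a minimal illustration, assuming a linear sensor response and a hypothetical `full_scale` parameter (the intensity at which the pixel saturates). Actual cameras may apply gain, offset or other corrections before producing the 8-bit value.

```python
def to_gray8(intensity: float, full_scale: float) -> int:
    """Map a measured intensity to an 8-bit gray level (0 = black, 255 = white).

    Assumes a linear response; full_scale is a hypothetical saturation level.
    """
    # Clip to the usable range, then scale linearly onto 0..255.
    fraction = min(max(intensity / full_scale, 0.0), 1.0)
    return round(fraction * 255)


print(to_gray8(0.0, 1000.0))     # 0, black
print(to_gray8(500.0, 1000.0))   # 128, a mid gray
print(to_gray8(1000.0, 1000.0))  # 255, white
```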

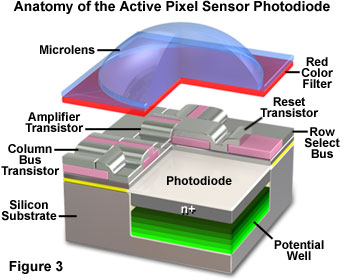

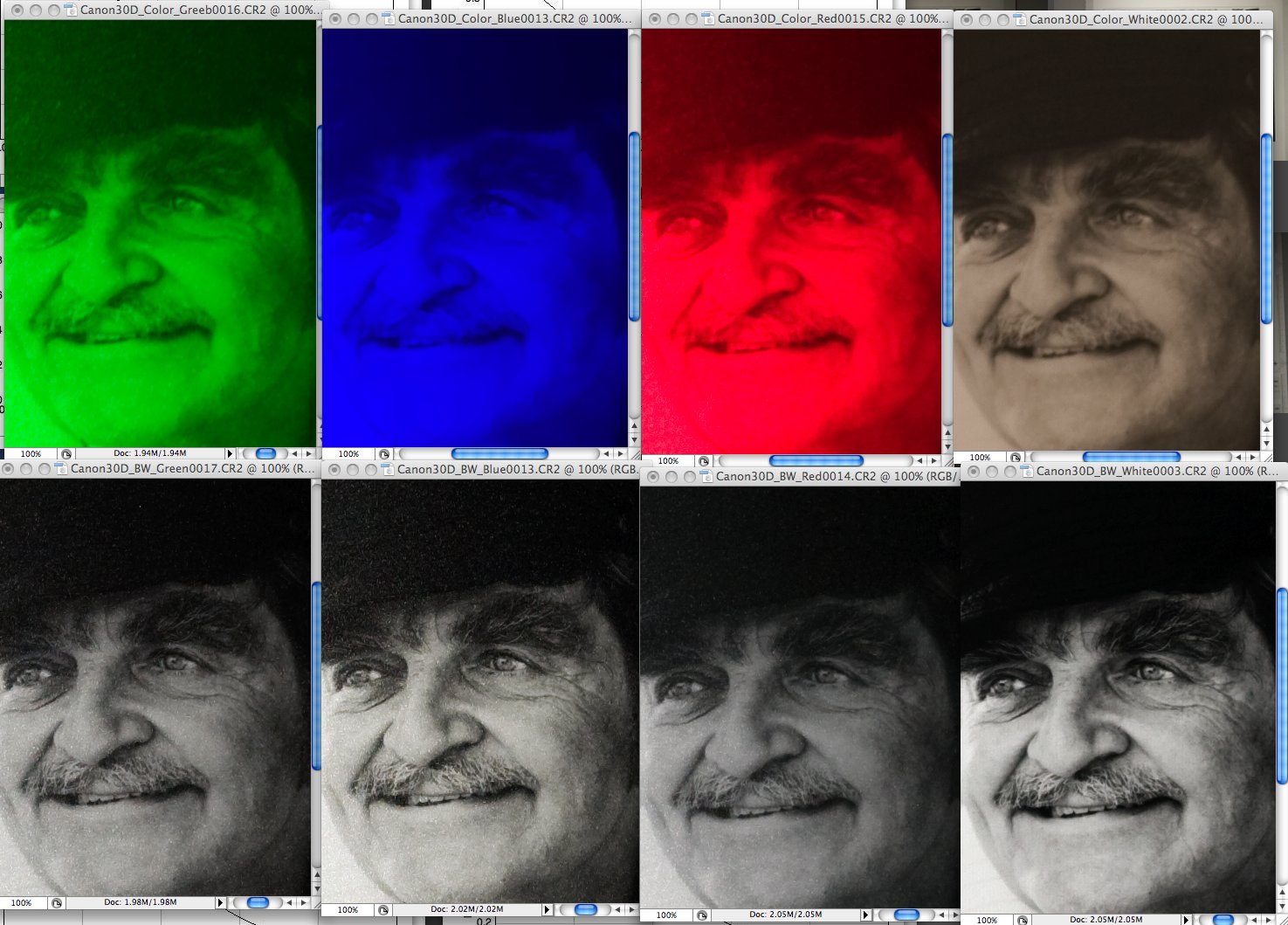

Color Digital Camera: In a color camera, each pixel is the same as one on a gray-scale camera, except that it has a color filter in front of it, so it measures the intensity of the light striking it only within that filter's color range. Each filter is red, green or blue, so the sensor measures intensity in the three primary colors.

Camera Resolution: The camera's spatial resolution, usually expressed in millions of pixels (megapixels), is the number of horizontal pixels on the sensor multiplied by the number of vertical pixels (e.g. a 1 Megapixel camera might have a 1,024 x 1,024 pixel sensor array).
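The arithmetic behind the 1 Megapixel example is simply horizontal times vertical pixels. A short sketch (the function name is illustrative only):

```python
def megapixels(horizontal: int, vertical: int) -> float:
    """Camera resolution: horizontal pixels times vertical pixels, in millions."""
    return horizontal * vertical / 1_000_000


print(megapixels(1024, 1024))  # 1.048576, marketed as "1 Megapixel"
```

A 1,024 x 1,024 array therefore holds 1,048,576 pixels, which is rounded to "1 Megapixel".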

Output Resolution: The output resolution is the spatial resolution, in pixels, of the final image produced by the camera. It is the same as the camera resolution, except that a gray-scale camera has only one intensity value per pixel, whereas in a color camera each output pixel has three intensity values, one each for red, green and blue.
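To make the difference concrete, the sketch below counts the intensity values in the output image for the same 1,024 x 1,024 resolution. The helper is hypothetical; at 8 bits per value, the counts are also the image sizes in bytes (ignoring any file-format overhead).

```python
def output_values(horizontal: int, vertical: int, color: bool) -> int:
    """Intensity values in the output image: 1 per pixel (gray) or 3 (R, G, B)."""
    channels = 3 if color else 1
    return horizontal * vertical * channels


gray = output_values(1024, 1024, color=False)
rgb = output_values(1024, 1024, color=True)
print(gray, rgb)  # 1048576 3145728
```

Both images have the same spatial resolution; the color image simply carries three values per pixel.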

Bayer Filter Pattern: A Bayer filter is the most common color filter array (CFA) pattern used in color cameras. It is the arrangement of color filters in front of the monochrome pixels contained in a color camera (see Figure 2).

How a Color Image is Formed: As described in the definitions, a color camera actually uses a monochrome (gray-scale) sensor with a color filter array (CFA) in front of it. Typically the CFA is the Bayer pattern shown in Figure 2. Figure 3 shows the resulting pattern created by the CFA on the sensor for red, green and blue values. Note that there are twice as many green pixels as red or blue ones. This is because the human eye is most sensitive to green light, so emphasizing green yields an image that the eye interprets as closest to “true color.”
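The 2:1:1 ratio of green to red and blue pixels can be checked with a small sketch of a Bayer CFA. It assumes an RGGB arrangement (even rows alternate red and green, odd rows alternate green and blue); the exact starting color in Figure 2 may differ, but the ratio is the same for any phase of the pattern.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def count_filters(height: int, width: int) -> Counter:
    """Count how many pixels sit behind each filter color."""
    return Counter(bayer_color(r, c) for r in range(height) for c in range(width))


# Print the top-left 4x4 corner of the pattern.
for r in range(4):
    print(" ".join(bayer_color(r, c) for c in range(4)))
# R G R G
# G B G B
# R G R G
# G B G B

counts = count_filters(1024, 1024)
print(counts["G"], counts["R"], counts["B"])  # 524288 262144 262144
```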

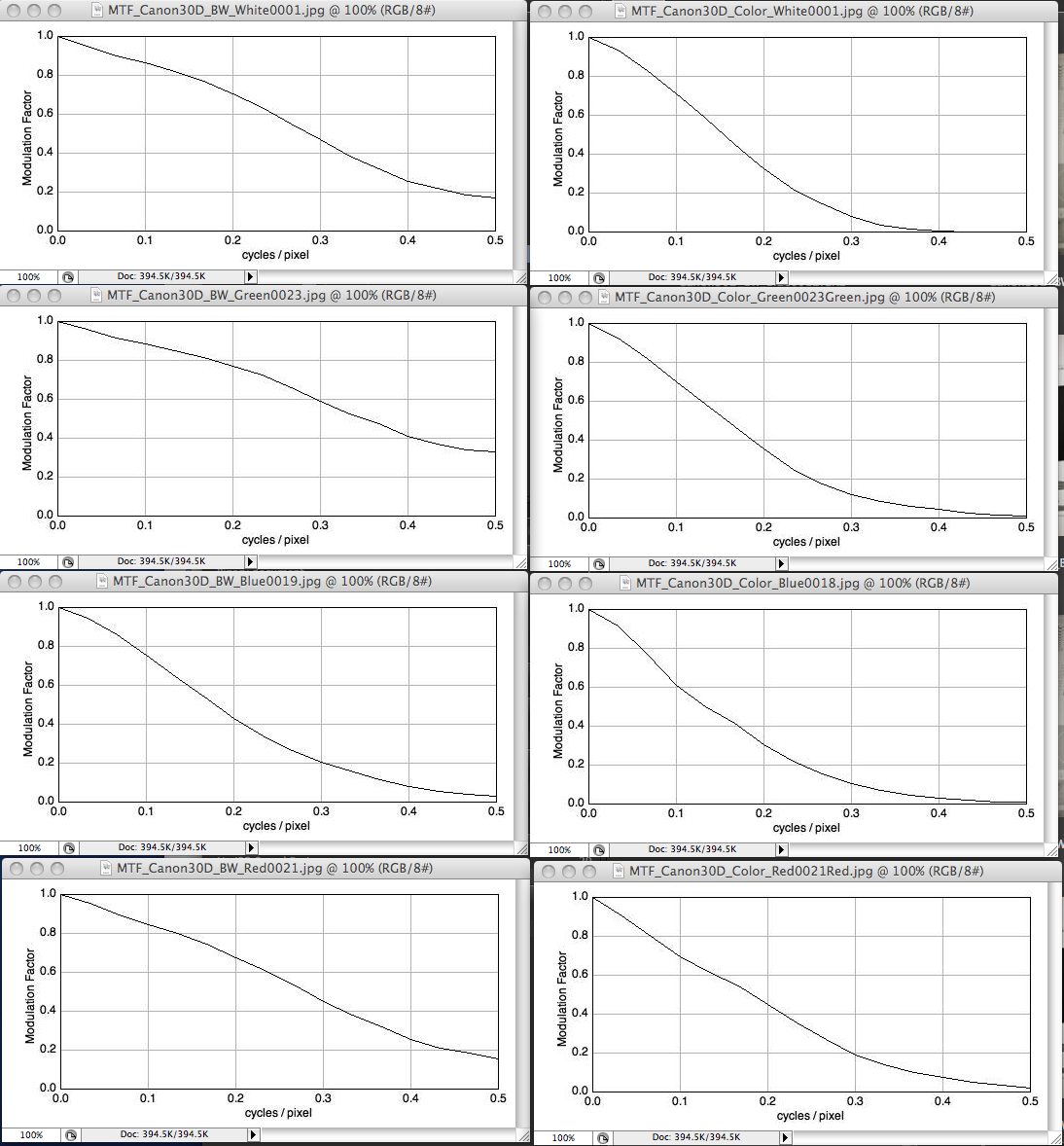

Demosaicing: If we were to take the direct output of the sensor as is, each color image would have far lower resolution than the camera resolution. For example, a 1 Megapixel camera would produce a 0.5 Megapixel green image and a 0.25 Megapixel image each for red and blue. To reconstruct an output image with the same resolution as the camera, a process called demosaicing is used. Essentially, this process interpolates the two missing color values at each pixel from neighboring pixels of those colors. There are many different interpolation algorithms, and each camera manufacturer uses its own. As a simple example, Figure 4 shows bilinear interpolation, in which the nearest 8 neighbors of each pixel in the camera image are considered.
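The bilinear scheme can be sketched in plain Python as follows. This is a textbook illustration, not any particular manufacturer's algorithm: it assumes an RGGB Bayer layout, keeps the value each pixel measured, and fills in each missing color with the average of the same-color pixels among its nearest 8 neighbors. The border handling (using whichever neighbors exist) is an assumption made to keep the sketch short.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb):
    """Simulate the CFA: each pixel keeps only the channel its filter passes."""
    index = {"R": 0, "G": 1, "B": 2}
    return [[px[index[bayer_color(r, c)]] for c, px in enumerate(row)]
            for r, row in enumerate(rgb)]


def demosaic_bilinear(raw):
    """Bilinear demosaicing of a Bayer mosaic (image must be at least 2x2).

    The channel a pixel measured is kept as is; each of the two missing channels
    is the mean of the same-color pixels among its nearest 8 neighbors.
    """
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h):
        out_row = []
        for c in range(w):
            pixel = []
            for channel in "RGB":
                if bayer_color(r, c) == channel:
                    pixel.append(float(raw[r][c]))  # measured directly
                    continue
                # Same-color neighbors in the 3x3 window, clipped at the borders.
                samples = [raw[i][j]
                           for i in range(max(r - 1, 0), min(r + 2, h))
                           for j in range(max(c - 1, 0), min(c + 2, w))
                           if bayer_color(i, j) == channel]
                pixel.append(sum(samples) / len(samples))
            out_row.append(tuple(pixel))
        out.append(out_row)
    return out


# A flat 4x4 patch of one color survives mosaic + demosaic unchanged.
scene = [[(10, 20, 30)] * 4 for _ in range(4)]
restored = demosaic_bilinear(mosaic(scene))
print(restored[1][1])  # (10.0, 20.0, 30.0)
```

On a flat field the interpolated values are exact; the approximation only shows where intensity changes from pixel to pixel.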

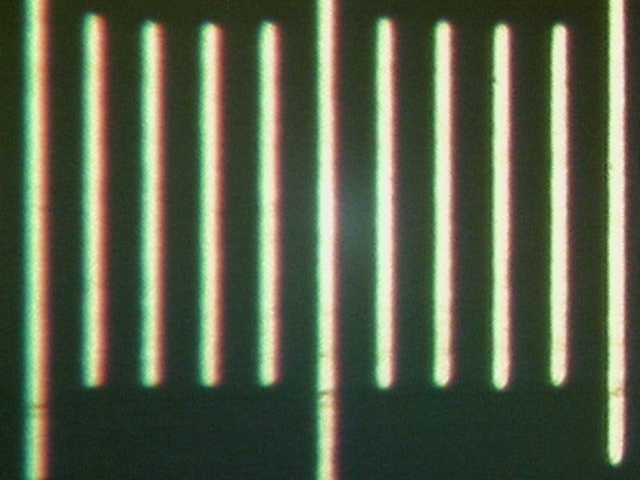

What This Means for FlowCAM Camera Choices: Since color sensors are merely gray-scale sensors with a CFA overlay, there is really no such thing as a “color” camera. As shown above, the CFA creates a color image by sampling the three primary colors (red, green and blue) separately at physically different locations, and then estimating the color values at the other locations via interpolation. This means that a color camera inherently has lower resolution than a gray-scale camera with the same sensor. Demosaicing brings the output image back up to the original camera resolution, with RGB values at every pixel, so in practice the difference in resolution is not as great as it first appears. It shows most at edges, where color aliasing occurs, as simulated in Figure 5.
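The edge effect can be reproduced with a small simulation. The sketch below photographs a neutral black/white vertical edge through an assumed RGGB Bayer pattern (every pixel records the same value whatever its filter, because the scene is gray) and demosaics it with a simple bilinear average of same-color neighbors, repeated here so the example runs on its own. Any pixel whose reconstructed R, G and B values differ shows false color.

```python
def bayer_color(row: int, col: int) -> str:
    # Assumed RGGB layout: even rows R G R G..., odd rows G B G B...
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic_bilinear(raw):
    # Keep the measured channel; average same-color pixels in the 3x3 window
    # (clipped at the image borders) for the two missing channels.
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            px = []
            for ch in "RGB":
                vals = [raw[i][j]
                        for i in range(max(r - 1, 0), min(r + 2, h))
                        for j in range(max(c - 1, 0), min(c + 2, w))
                        if bayer_color(i, j) == ch]
                px.append(float(raw[r][c]) if bayer_color(r, c) == ch else sum(vals) / len(vals))
            row.append(tuple(px))
        out.append(row)
    return out


# Neutral scene: black in columns 0-2, white in columns 3-5.
H, W = 4, 6
raw = [[0 if c < 3 else 255 for c in range(W)] for r in range(H)]

rgb = demosaic_bilinear(raw)
false_color_cols = sorted({c for row in rgb for c, px in enumerate(row) if max(px) != min(px)})
print(false_color_cols)  # [2, 3]
print(rgb[0][2])         # (0.0, 85.0, 127.5): a gray edge pixel turned bluish
```

Away from the edge the reconstruction stays neutral; only the two columns on either side of the transition pick up false color.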

Color versus Black & White Camera Trade-offs: As detailed above, the primary trade-off to consider when choosing a camera for your FlowCAM is spatial versus color resolution. The monochrome camera gives higher spatial resolution. This is particularly important for objects that span relatively few pixels at the magnification in use (that is, objects that are small compared with the calibration factor), where every pixel counts in determining size and shape measurements. An example would be particles of 8 μm to 10 μm when using the 10x magnification, where the calibration is around 0.6 μm/pixel.
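The numbers in the 10x example can be worked through as follows. The 0.6 μm/pixel calibration is the approximate value quoted above; treating a one-pixel error at the particle boundary as the measurement uncertainty is a simplifying assumption for illustration. The last column uses the fact that, on a Bayer sensor, red (or blue) is measured only on every other pixel along a row or column.

```python
CAL_10X_UM_PER_PIXEL = 0.6  # approximate 10x calibration quoted above


def pixels_across(size_um: float, um_per_pixel: float) -> float:
    """How many pixels a particle of the given size spans."""
    return size_um / um_per_pixel


for size_um in (8.0, 10.0):
    n = pixels_across(size_um, CAL_10X_UM_PER_PIXEL)
    one_pixel_pct = 100 / n  # one pixel, as a percentage of the particle size
    red_samples = n / 2      # red measured only on every other pixel (Bayer)
    print(f"{size_um:.0f} um: {n:.1f} px across, 1 px = {one_pixel_pct:.1f}% "
          f"of the size, ~{red_samples:.1f} red samples across")
```

An 8 μm particle spans only about 13 pixels, so a single pixel is roughly 7.5% of its diameter; this is why the extra spatial resolution of the monochrome camera matters at this scale.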

When making this decision, it is always important to keep in mind the end goal of imaging particle analysis, which is to separate and characterize different particle types in a heterogeneous mixture. This is done by filtering the particles using either the value or statistical filtering capabilities of VisualSpreadsheet®. If color information is particularly useful for an application, such as identifying plankton, then a color camera may be appropriate. Keep in mind that FlowCAM is a back-lit (brightfield) system, in which opaque particles appear only as black silhouettes against a white background, so color offers no benefit in these situations. Even when the particles are transparent, if they have no strong color component that distinguishes them from other particles, the loss in spatial resolution caused by using the color camera may be counter-productive to particle characterization.

____________________________________________________________________________________________________________

Why can color cameras use lower resolution lenses than monochrome cameras?

The difference between ordinary color and monochrome ("black and white") cameras is an additional layer of small color filters, usually arranged in the so-called "Bayer pattern" (patented in 1976 by Bryce E. Bayer, an employee of Eastman Kodak).

In a perfect world, a lens would map any (arbitrarily small) point on the object to an (arbitrarily small) point on the sensor, so arbitrarily small pixel sizes could be supported.

Unfortunately, the laws of physics (diffraction) only allow a lens to image a point as a small disk of light (an "Airy disk"). The number of megapixels specified for a lens is a rough measure of the size of these disks (we are talking about a few micrometers here): the more megapixels, the smaller the disks.

For the image on a monochrome sensor to be in focus, each disk must fit within the footprint of a single pixel.

On a color sensor with a "Bayer pattern", red pixels occur only in every other column and row. Here, disks of light that fit within a single pixel may even be undesirable:

The disks should be large enough to cover one red, one blue and two green pixels, i.e. 2×2 pixels in size. This means that, compared with a lens for a monochrome application, the lens may, and perhaps even should, have a lower resolution. To avoid color moiré, we can use an objective with lower resolution, as stated above, or an (expensive!) so-called "OLP" filter (Optical Low Pass filter) that guarantees a minimum blur of 2 pixels. Such filters are requested when customers use the camera for videoconferencing and want to avoid color moiré.
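To put rough numbers on the disk sizes, the sketch below uses the standard diffraction-limited Airy disk diameter, d = 2.44 · λ · N (λ the wavelength, N the working f-number), which is not given in the text above. The 3.45 μm pixel pitch and 0.55 μm (green) wavelength are hypothetical example values, and lens aberrations are ignored, so this only indicates the trend: a Bayer sensor tolerates a diffraction spot twice as wide as a monochrome sensor with the same pixels.

```python
def airy_disk_diameter_um(wavelength_um: float, f_number: float) -> float:
    # Diffraction-limited spot diameter to the first dark ring: d = 2.44 * lambda * N.
    return 2.44 * wavelength_um * f_number


def f_number_for_spot(spot_um: float, wavelength_um: float) -> float:
    """Working f-number at which the diffraction spot reaches spot_um."""
    return spot_um / (2.44 * wavelength_um)


PIXEL_PITCH_UM = 3.45  # hypothetical sensor pixel pitch
WAVELENGTH_UM = 0.55   # green light, hypothetical example

mono_n = f_number_for_spot(1 * PIXEL_PITCH_UM, WAVELENGTH_UM)   # disk fits one pixel
bayer_n = f_number_for_spot(2 * PIXEL_PITCH_UM, WAVELENGTH_UM)  # disk covers 2x2 Bayer pixels

print(f"monochrome: spot fits one pixel up to about f/{mono_n:.1f}")
print(f"Bayer:      spot spans two pixels at about f/{bayer_n:.1f}")
```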

Full color can still be reconstructed at every pixel, because every pixel has direct neighbours in the other colors. For example, at the location of a green pixel, the red intensity is predicted(!) from the intensities of the red pixels in its direct neighbourhood. As a side effect, the RGB image is larger on the computer than on the sensor: it stores three values per pixel, whereas the sensor measured only one.